Read About The Hidden Costs, Challenges, and Total Cost of Ownership of Generative AI Adoption in the Enterprise as Well as C-level Key Considerations, Challenges and Strategies for Unleashing AI at Scale

ClearML recently conducted two global surveys with the AI Infrastructure Alliance (AIIA) on the business adoption of Generative AI. We surveyed 1,000 AI leaders and C-level executives in charge of spearheading Generative AI initiatives within their organizations. Both survey reports shed light on the adoption, economic impact, and significant challenges these professionals face in unleashing Generative AI’s potential at scale.

In our first report, “Enterprise Generative AI Adoption: C-Level Key Considerations, Challenges and Strategies for Unleashing AI at Scale,” we found that while the majority of respondents said they need to scale Generative AI, they also said they lacked the budget, resources, talent, time, and technology to do so. Given AI’s force-multiplier effect on revenue, new product ideas, and functional optimization, it’s clear that critical resource allocation is needed for companies to effectively invest in AI to transform their organization. Key challenges in Generative AI adoption within the enterprise include:

- Managing overall running and variable costs at scale

- Having complete oversight and understanding of LLM performance

- Hiring human capital and the lack of availability of specialized talent

- Improving efficiency and productivity while managing costs and TCO

- Increasing governance and visibility

Key Findings from the First Survey and Research Report

Enterprise Adoption by Department and Business Unit

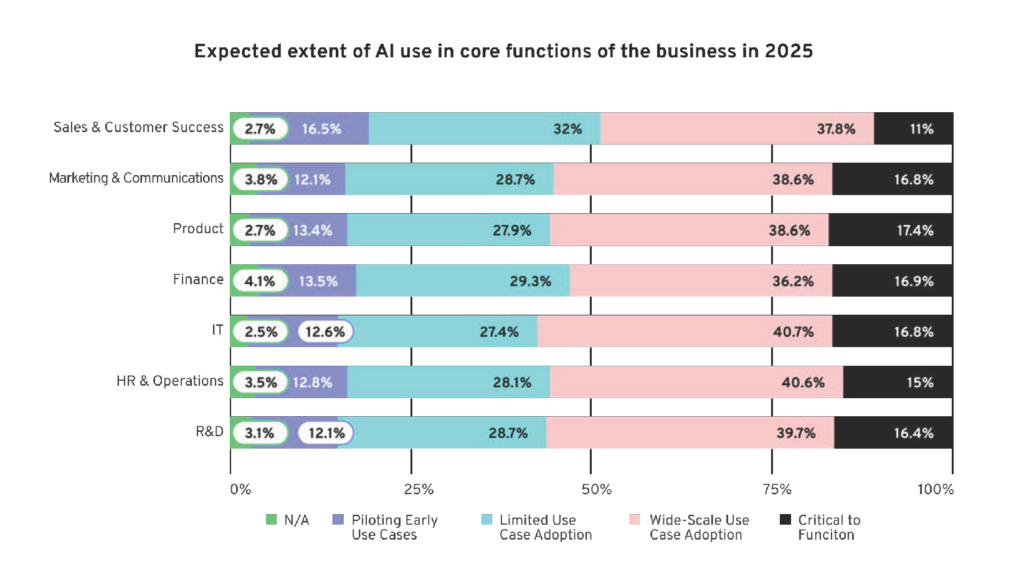

When asked about the expected extent of Generative AI use in the core functions of their business, the majority of respondents believed the following departments will have limited, wide-scale, or critical use case adoption by 2025:

Plans for Adoption

- 81% of respondents consider unleashing AI and machine learning for creating business value as a critical top priority.

- 78% of enterprises plan to adopt xGPT / LLMs / generative AI during 2023, and an additional 9% plan to start adoption in 2024.

Expectations for Revenue

- 57% of respondents’ boards expect a double-digit increase in revenue from AI/ML investments in the coming fiscal year, while 37% expect a single-digit increase.

Resource Constraints & Impacts

- 59% of C-level leaders lack the necessary budget and resources for successful Generative AI adoption, hindering value creation.

- 66% of respondents face challenges in quantifying the impact and ROI of their AI/ML projects on the bottom line due to underfunding and understaffing.

- 42% need more expert machine learning personnel to ensure success.

Enterprise Challenges & Blockers

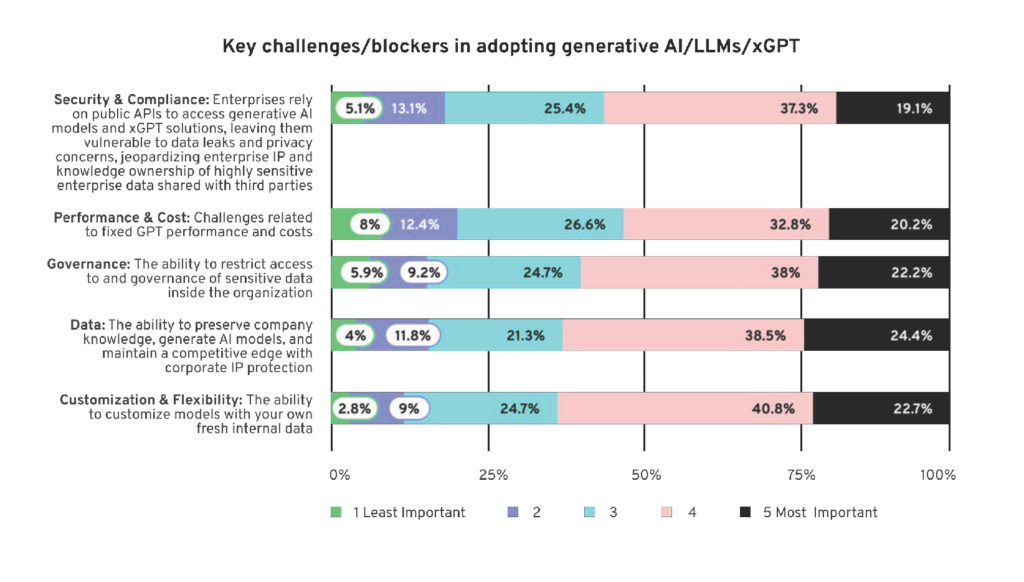

When asked to rate the key challenges and blockers in adopting generative AI / LLMs / xGPT solutions across their organization and business units, respondents rated five key challenges as most important:

Platform Standardization

- 88% of respondents indicated their organization is seeking to standardize on a single AI/ML platform across departments versus using different point solutions for different teams.


In our second report, “The Hidden Costs, Challenges, and Total Cost of Ownership of Generative AI Adoption in the Enterprise,” we examined the hidden costs and unknowns of Generative AI business adoption. We set out to unpack how AI leaders are navigating the uncharted territory of hidden operating costs related to Generative AI, which are often described as unfamiliar and unpredictable. We also explored how global organizations plan to balance Gen AI investments with expected outcomes and their overall running and variable costs.

Our findings show that it is essential for organizations and AI leadership to develop an effective, strategic approach to calculating, forecasting, and containing these costs tailored to their own organization and its unique business use cases. Hence, we chose to identify how confident AI leaders and C-level executives feel about accurately predicting and forecasting the TCO and ROI for Gen AI in their organizations while considering key factors and cost drivers such as setup, training, maintenance, running costs, specific use cases, and variable costs such as compute.

Key Findings from the Second Survey and Research Report

1) Blind spot: respondents are not considering the total costs of Generative AI

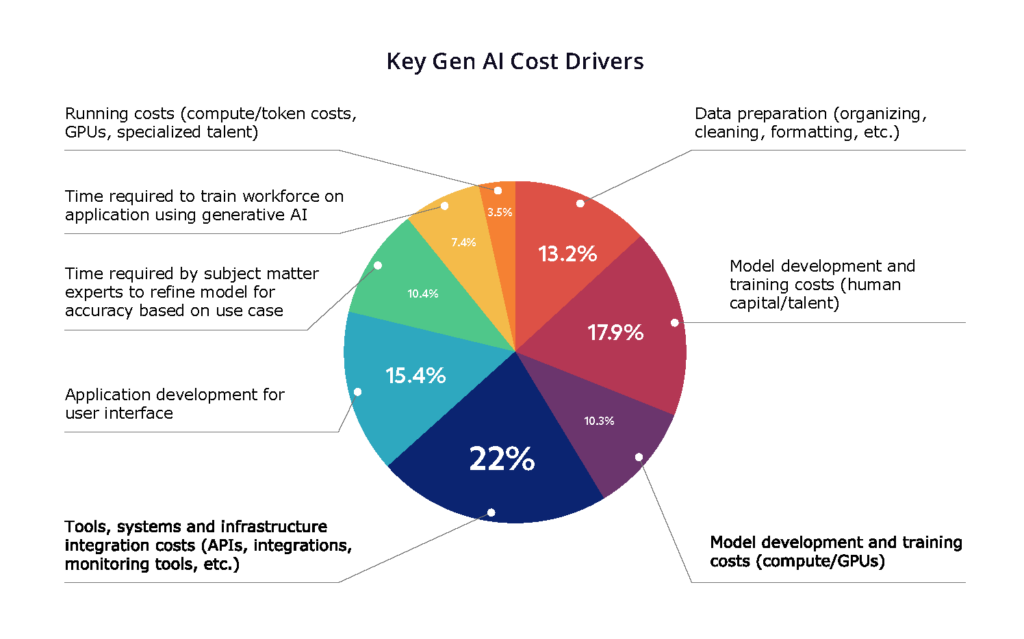

Based on survey answers, we found that most respondents believe their Gen AI costs are centered around model development, training, and systems infrastructure: that is, the costs associated with how a model works, such as human capital, the tools and systems to run it, and the app/UI for users. Unfortunately, the reality is quite different. We believe respondents are underestimating how messy data can be and the heavy lifting needed for data prep. It’s worth noting that this is even more challenging if their company is using AI as a Service (i.e., using an API to connect to an LLM such as OpenAI ChatGPT, Google Bard, or Cohere™ Generate). Similarly, respondents are underestimating the time required by SMEs to work with the engineering team to ensure the model is accurate and “good enough” to roll out. Most importantly, a shockingly low 8% of respondents said they would attempt to control their budget by limiting models and/or access to Gen AI. That suggests most are not thinking about running costs, which we expect will come as a huge surprise given how heavily running costs drive TCO.

2) Unrealistic expectations: most companies want to implement and run Gen AI themselves

Nearly every respondent (91%) plans to resource or staff in-house to support future Gen AI efforts. That’s bad news for consultants, who all seem to be building Gen AI capabilities into their talent pool, but it does lead us to believe that organizations are considering scaling Gen AI for the long haul. However, that requires some serious cost considerations for how they are budgeting going forward and how to be most efficient using their budgets year-over-year. They may well be overestimating how much they can do with their budget, particularly in light of the findings above. It’s interesting that 21% of respondents want to use their existing team, which means either finding more ways to scale efficiently and do more with less, or simply producing fewer models.

3) Critical prioritization: the key to selecting use cases for implementation within budget

While 82% of respondents are considering 4-9 use cases for their organization, with end users ranging from 501 to 5,000, an alarmingly low 20% of respondents have allocated an annual budget of more than $2 million. That is worrying because, according to ClearML’s TCO calculator, the first year of training, fine-tuning, and serving a model for 3,000 employees costs around $1 million (depending on data corpus and use case) using an in-house team, with future economies of scale possible through shared compute usage.

Meanwhile, 32% of respondents reported they are currently using ChatGPT, and these respondents are likely to find scaling across their business quite expensive, as costs grow linearly with token usage. We’re hard-pressed to understand how this usage will ultimately fit within estimated future budgets, another gap between vision and reality.

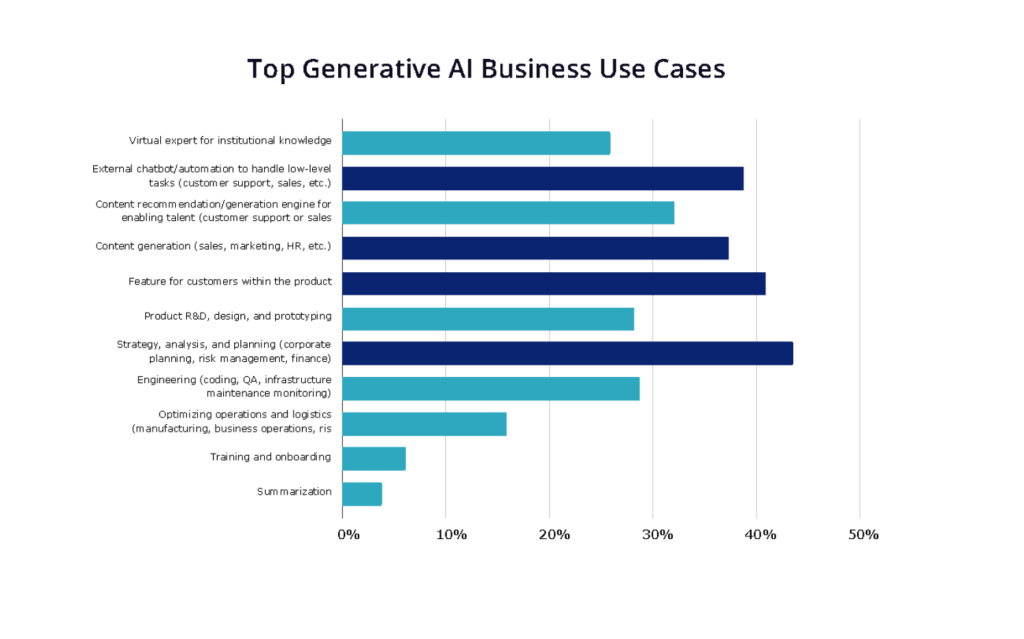

4) One size does not fit all: organizations will need a wide variety of Gen AI tools and models

We found that 37% of respondents plan to use Gen AI for content generation, which can be accomplished with popular out-of-the-box single use case applications such as Jasper™ and Copy.ai or available LLM APIs such as Cohere™ Generate. This use case is the least expensive to address, as organizations can simply purchase off-the-shelf apps with a low-cost subscription per user. Best of all, there is no need for organizations to share proprietary data with the app developer, so it is also a low-risk activity, one that is easy to outsource.

Having said that, three of the most commonly requested use cases require significant access to internal documents and internal organizational data in order to produce accurate and helpful results. These are:

- Content recommendation engine for supporting internal teams

- Assistant for strategy, corporate planning, and finance

- Gen AI as a product feature

The models for these use cases will likely need to be in-house and most likely on-prem in order to protect company data and IP, which means businesses will need to make the investment to build their in-house teams to support multiple models for multiple use cases.

5) Reality gap: limiting access to Gen AI is not seen as an effective way to stay on budget

92% of respondents are committed to growing their budget in line with users and do not want to stay under budget by limiting access: 42% said they would grow budgets to accommodate more users, and 50% will try to find savings through economies of scale.

However, achieving economies of scale through AI as a Service is virtually impossible because the price to use the service increases linearly with usage. Not only that, prompt engineering efforts are typically customized for each use case, so for businesses running multiple use cases, there are no time/energy savings. For enterprises running multiple models for various business units and use cases, the easiest way to attain economies of scale is through resource pooling: leveraging human capital that can build, maintain, and monitor the models across the business, as well as sharing compute power for serving.
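To make the difference concrete, here is a minimal sketch of how per-user cost behaves under the two models. The figures are illustrative assumptions only, not survey data or output from our TCO calculator: `price_per_user`, `fixed_cost`, and `marginal_cost_per_user` are hypothetical parameters chosen to show the shape of each curve.

```python
def api_cost_per_user(users: int, price_per_user: float) -> float:
    """Per-user annual cost when the total bill grows linearly with users."""
    total = users * price_per_user
    return total / users


def pooled_cost_per_user(
    users: int, fixed_cost: float, marginal_cost_per_user: float
) -> float:
    """Per-user annual cost when a shared team and shared compute (a fixed
    cost) are spread across all users, plus a small per-user serving cost."""
    total = fixed_cost + users * marginal_cost_per_user
    return total / users


# Hypothetical numbers for illustration only.
for users in (1_000, 5_000, 20_000):
    api = api_cost_per_user(users, price_per_user=200.0)
    pooled = pooled_cost_per_user(
        users, fixed_cost=500_000.0, marginal_cost_per_user=20.0
    )
    print(f"{users:>6} users: API ${api:,.0f}/user, pooled ${pooled:,.0f}/user")
```

Under linear pricing the per-user cost never moves, no matter how many users are added. With pooled resources the fixed cost is divided across more users, so the per-user cost falls as adoption grows. Where the two curves cross depends entirely on an organization's own numbers.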

Another concern highlights the gap between hyped vision and reality: the willingness to give employees access to Gen AI, while great, will lead to spiraling costs that may catch organizations unawares. Underestimating costs as usage goes up seems to be a common theme in the results of this global survey. This is likely to leave organizations in a very difficult spot in the future, one that might cause a reshuffling of resources and the need to supplement budgets mid-year to bridge the usage gap.

6) Caveat emptor: it’s astoundingly difficult for AI Leaders to predict the future hidden costs of Gen AI for their business

As we mentioned before, a mere 8% of respondents are thinking about running costs; any organization not considering the total cost of ownership for Gen AI is in for a huge surprise when the bill comes due.

Meanwhile, a third of respondents acknowledge that OpenAI’s APIs are slow/unresponsive/unstable and that the costs of LLM APIs are high and/or growing too fast, although 64% of respondents accept that it may cost more than $200/year/user. But compare this to the 50% of respondents who believe that 11-25% of all employees will be using Gen AI in year 1 of rollout, escalating to 26-50% of employees in year 2, and you can see how quickly the user base grows and how total costs will accumulate.
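As a rough illustration of that arithmetic, the sketch below projects annual spend for a hypothetical 10,000-employee company, using the adoption ranges above and treating $200/year/user as a flat per-user cost. Both the headcount and the flat-cost simplification are our assumptions for the example; since respondents said costs may exceed $200, the results are best read as a lower bound.

```python
def annual_cost(employees: int, adoption_pct: int, cost_per_user: int) -> int:
    """Annual spend in dollars when adoption_pct percent of employees use Gen AI."""
    return employees * adoption_pct * cost_per_user // 100


EMPLOYEES = 10_000    # hypothetical company size
COST_PER_USER = 200   # $/year/user, the threshold cited in the survey

# Adoption ranges (low %, high %) reported by respondents for each rollout year.
adoption_ranges = {"year 1": (11, 25), "year 2": (26, 50)}

for year, (low, high) in adoption_ranges.items():
    low_cost = annual_cost(EMPLOYEES, low, COST_PER_USER)
    high_cost = annual_cost(EMPLOYEES, high, COST_PER_USER)
    print(f"{year}: ${low_cost:,} to ${high_cost:,}")
# year 1: $220,000 to $500,000
# year 2: $520,000 to $1,000,000
```

Even at this conservative per-user figure, the low end of year 2 exceeds the high end of year 1, which is the kind of jump that is easy to miss in a budget set before rollout.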


The bottom line from both reports? Organizations of all sizes seem ill-prepared to scale Generative AI. While they recognize running costs are high, they are not accounting for them in their forecasts and estimations of cost drivers. We believe organizations need to better align their Generative AI strategy with their business goals and operating budgets and allocate the necessary resources and governance in order to bridge the gap between their vision and reality.