One Platform, Endless Use Cases

Join 1,300+ forward-thinking enterprises using ClearML

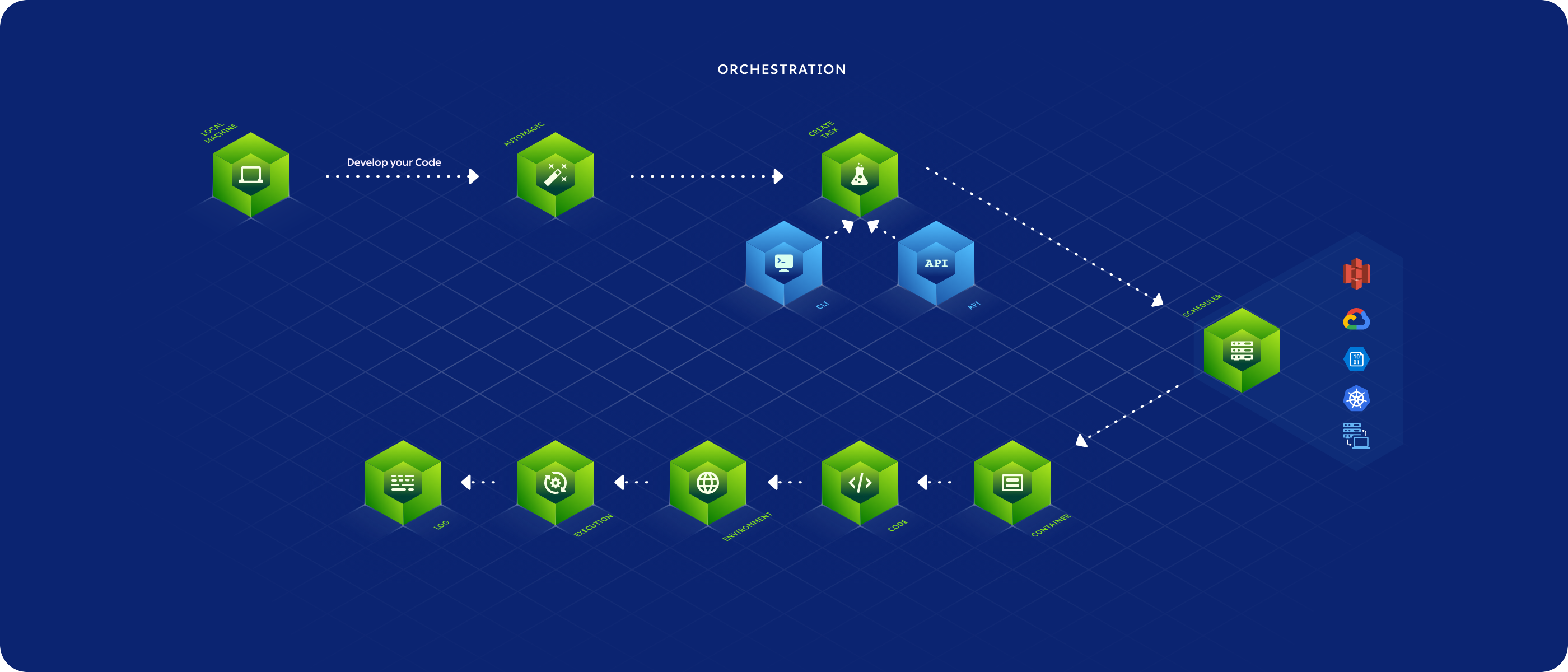

Launch from anywhere into your infrastructure, with no need to manually containerize every job.

Full Utilization of GPUs

Maximize the return on your compute infrastructure investments with built-in support for multi-instance GPUs, scheduling, quota management, and job prioritization.

Empower Stakeholder Self-Service

Let team members do more on their own through automation, greater reproducibility, and the ability to build custom UIs for them to use.

Dynamically Pool Compute Resources

Enterprise customers can use ClearML to manage compute at scale by making all resources available to end users, whether on-prem, in the cloud, or hybrid, and by spilling over onto additional cloud resources when more capacity is needed.

Flexible Infrastructure Support

Access a control plane on-prem or in the cloud, with support for any combination of Kubernetes, Slurm, and bare metal, regardless of where machines reside.

Enterprise-grade Security

Enterprise customers get fully authenticated and encrypted communication channels paired with SSO and LDAP integration to ensure the right levels of access and visibility.

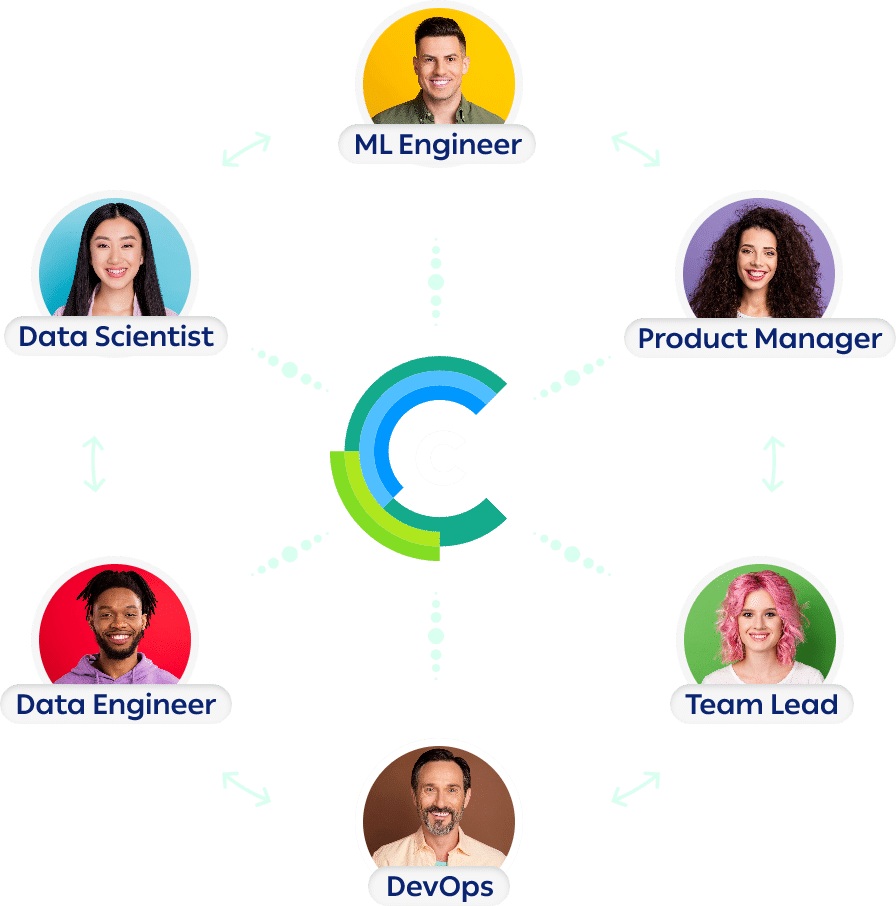

One Platform, Many Stakeholders

Data Scientists

Take your ML to the next level by automating any experiment with the click of a button. With ClearML, you can launch your local code in the cloud without manually containerizing it. Any user can then run your experiments, change arguments, and train or test models directly from the UI.

ML Engineers

Easily manage compute access within the team, with full visibility and resource allocation controls tied to budgets. Deploy and automate straight from your development environment using code, the CLI, Git, or the web UI, and launch into your infrastructure, whether it's a cloud environment, bare metal, Kubernetes, or Slurm. With ClearML, there is no need to manually containerize every job, which reduces container clutter and saves the registry storage otherwise consumed by single-use containers.

DevOps

Enable self-service access to compute without the administrative overhead of provisioning more machines, managing credentials, or tracking compute usage and budgets. Optimize compute at scale by dynamically pooling all compute resources (on-prem, cloud, or hybrid) and autoscaling into AWS, GCP, or Azure as needed. Integrate ClearML with your company's existing ML tools, with fully authenticated and encrypted communication channels and enterprise-grade security.

Get GPU Partitioning for Free

Take advantage of our free fractional GPU capabilities for open source users, which allow DevOps teams to control GPU utilization so that GPUs can support multiple AI workloads. With this latest open source release, you can optimize your organization's compute utilization by partitioning GPUs, run HPC and AI workloads more efficiently, and get a better ROI from your existing AI infrastructure and GPU investments.

4.2M+ tasks automated every month.

Integrations

Layer ClearML on top of your existing infrastructure or integrate it with your preferred scheduling tool.

See the ClearML Difference in Action

Explore ClearML's End-to-End Platform for Continuous ML

Easily develop, integrate, ship, and improve AI/ML models at any scale with only two lines of code. ClearML delivers a unified, open source platform for continuous AI. Use all of our modules for a complete end-to-end ecosystem, or swap out any module for tools you already have to build a custom setup. ClearML is available either as a unified platform or as a modular offering.