By Vidushi Meel, Freelance Technical Writer

What is MLOps? In this complete guide, learn what Machine Learning Operations (MLOps) is, how it works, why it’s needed, and what advantages it offers.

What is MLOps

MLOps (short for Machine Learning Operations) is the practice of integrating machine learning (ML) models into an organization’s software delivery process, using a set of practices that keep those models reliable. MLOps involves collaboration between data scientists and programmers, who build and train the models, and IT professionals, who handle the infrastructure and deployment of the models.

An MLOps cycle encapsulates the entire machine learning model lifecycle, from producing ML models to maintaining them after deployment. In other words, MLOps brings together the development and operations of machine learning models.

MLOps Platforms

MLOps platforms make it easier to orchestrate and deploy machine learning workflows. They provide services for the different components of an MLOps workflow in one place, usually through a user interface. This makes it much easier to incorporate an ML workflow into a new or existing product, because the platform does much of the legwork.

ClearML, for instance, is an MLOps platform that can turn your existing code into a full-fledged MLOps workflow with just a few lines of code. With a platform like ClearML, you can log, develop, and automate your existing code end-to-end. Other popular MLOps platforms include Kubeflow, Amazon SageMaker, MLflow, and TensorFlow Extended (TFX).

Why is MLOps Necessary?

Adopting an MLOps approach can benefit machine learning model creation by streamlining model development. The three main reasons MLOps is necessary are that it decreases time to deployment, increases scalability, and reduces error rates.

When used effectively, MLOps helps organizations develop, deploy, and maintain machine learning models more easily than they could without it. Without MLOps, developing and deploying machine learning models can be slow and error-prone, which can delay shipping the model and increase costs.

Some reasons MLOps is necessary in industry environments:

- MLOps can help ensure the reproducibility and reliability of machine learning models: MLOps practices such as version control and continuous integration can help ensure ML models are developed in a reproducible way and that they are reliable in production.

- MLOps increases overall productivity: Low-code environments that provide access to organized, focused data sets reduce the number of data scientists needed to ship an ML model and let them move faster, which reduces wasted time and money.

- Improving collaboration between data scientists and IT professionals: This isn’t the first time we’ve touched on this point, but it’s just that important. MLOps helps bridge any existing gaps between these two groups by providing a common set of practices and/or tools that they can use to work together more effectively.

- Improving the monitoring and management of machine learning models: MLOps practices such as monitoring and alerts can help organizations to more effectively monitor the performance of their machine learning models and to identify and resolve any issues that arise.

- Increasing sales for new products by producing machine learning models: Machine learning models can be used to make personalized recommendations to customers based on their past purchases and other factors, which can help sales and marketing teams identify new opportunities and upsell existing customers.

These points work together to accelerate time to deployment for machine learning models, which any company or individual can benefit from. Accelerating time to deployment allows organizations to realize the benefits of their machine learning investments more quickly, and reduces time-to-market for new products.

Who Benefits from Using MLOps?

Any AI-driven organization.

This report from McKinsey shows the importance of AI in general and suggests that better AI practices lead to better results. Across industries, companies’ revenue increases, cost decreases, and net-profit gains in 2021 can be attributed in part to effective AI integrations. Specific AI integrations, when done well, can greatly benefit a company’s quarter-over-quarter progress.

Specific AI integrations include small ML models that make a big difference, like personalization models that show users a customized experience on shopping websites. Getting these small details of a customer’s experience right can make customers more likely to return, and therefore more likely to buy again. MLOps significantly simplifies the ML model creation process, and can thereby benefit such companies.

Companies Using MLOps Today

MLOps adoption across industries is growing quickly, especially as AI becomes more integrated into present and future technology.

Here are a few examples of how companies are using MLOps today:

- Booking.com: Booking.com has been able to scale ML models for destination and accommodation recommendations to their customers using MLOps workflows powered by Kubernetes.

- Genpact: NASSCOM and Genpact recently launched the MLOps playbook, a compendium of MLOps implementation frameworks and industry best practices that makes the case for MLOps in industry.

- Daupler: Daupler used MLOps to automate its response management process. Using ClearML’s MLOps automation, Daupler improved its response time and efficiency and gained insights into its customer interactions. ClearML helped Daupler optimize its response management by supporting data collection, model training, and deployment, and by providing real-time performance metrics. The resulting pipeline improved Daupler’s customer service by letting the team analyze customer interactions to identify patterns and areas for improvement.

MLOps in Action

MLOps has numerous day-to-day applications, anywhere you might see artificial intelligence integrations. It can be used to automate the process of creating almost any ML model.

Real Life Applications

The crux of MLOps’ usefulness lies in its flexibility and scalability. MLOps can be seen in action anywhere from supporting the development of ML-enhanced sensors used in agriculture to improving efficiency in business management.

For instance, a retail company might need a machine learning model integrated into its website that shows repeat customers suggestions based on their purchase history. MLOps can help automate training, deploying, and monitoring the model, and help ensure that it complies with relevant regulations.

MLOps has been used for fraud detection, where ML models are trained to detect fraudulent activity in real time. In this application, MLOps practices support models that detect fraudulent credit card transactions and fraudulent insurance claims.

Predictive healthcare is another lucrative and essential industry that uses MLOps to simplify the creation and implementation of necessary machine learning models, like those that predict patient outcomes or identify potential health issues before they become serious.

General Applications

These are just a few industry-specific examples of MLOps, but MLOps can be used anywhere in a number of creative ways.

Companies or individuals can also leverage MLOps to improve their ML timelines in more general ways, such as:

- Automating the roadmap for building machine learning models by streamlining the build, training, and deployment process

- Organizing infrastructure and platforms for running machine learning workloads (for example, no-code and low-code platforms are MLOps in action)

- Implementing processes for version control, testing, and rollback for machine learning models

- Enabling collaboration and communication between teams working on machine learning projects, including teams across subject divides (e.g., Sales and Engineering)
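The version control and rollback bullet above can be made concrete with a small sketch. The `ModelRegistry` class below is hypothetical, an in-memory stand-in rather than the API of any particular MLOps platform; real registries persist versions to storage and track far more metadata. It shows the core idea: every trained model gets a version number, and production can be reverted to the previously promoted version.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: stores model versions and tracks which one is live."""
    versions: dict[int, Any] = field(default_factory=dict)
    history: list[int] = field(default_factory=list)  # promoted versions, oldest first

    def register(self, model: Any) -> int:
        # Assign the next version number and store the model object.
        version = len(self.versions) + 1
        self.versions[version] = model
        return version

    def promote(self, version: int) -> None:
        # Make a registered version the one served in production.
        if version not in self.versions:
            raise KeyError(f"unknown version {version}")
        self.history.append(version)

    def rollback(self) -> int:
        # Revert to the previously promoted version and return its number.
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.history[-1]

    @property
    def live(self) -> Any:
        # The model currently served in production.
        return self.versions[self.history[-1]]


registry = ModelRegistry()
v1 = registry.register("model-a")  # strings stand in for real model objects
v2 = registry.register("model-b")
registry.promote(v1)
registry.promote(v2)   # model-b goes live
registry.rollback()    # model-b misbehaves, so revert
print(registry.live)   # model-a
```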

Components of MLOps

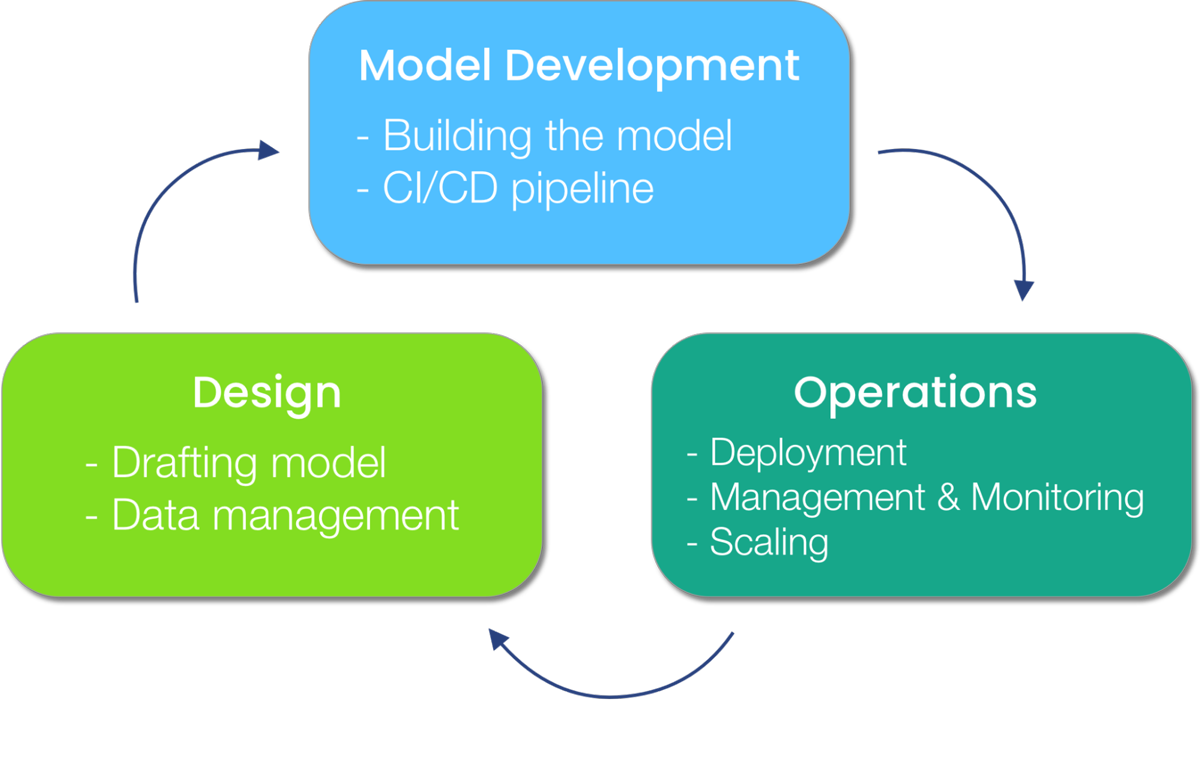

Another way to think about MLOps is to think of what it means to operationalize data science and machine learning solutions. MLOps utilizes code and best practices that promote efficiency, speed, and robustness.

MLOps works hand-in-hand with DevOps, applying its practices to ML systems to produce better ML models, faster. Part of producing better ML models at a faster pace is creating the right environment for it. The right environment is data-driven, catalyzes cross-team collaboration, and uses feedback (both data feedback and real-life feedback) to improve.

So how can we do this?

MLOps components vary from system to system based on the specific needs of organizations using MLOps, but some components are relatively constant. Here, we’ll go over some common components of most MLOps systems.

1. Design

MLOps spans a variety of teams, and the design component is a great example of that. The first phase of MLOps workflows is dedicated to designing the right framework for the ML models to be incorporated and used in a business context.

Many business-oriented questions come up during this first phase. For example, what is the goal of this machine learning model? How will it help drive sales? What kind of data will be needed to train it, and how will that data be acquired?

In this step, data scientists work with sales teams to outline the ML model before engineers build it. The design component aims to develop an understanding of the problem statement, the data availability, and the business objectives. Teams might then perform exploratory data analysis, feature engineering, or model selection to design the best machine learning model for the problem.

Drafting the Model

During the design phase, it is important to consider things like:

- How the model will be trained and evaluated

- What kind of data is needed

- How the model will be deployed in production

- What performance metrics will be used to evaluate the model

- What kind of monitoring and alerting will be set up

The goal is to create a “draft” of the machine learning model by answering questions like these as thoroughly as possible. The more that’s figured out before work on the model begins, the more productive and efficient development will be once the project is passed to engineers.
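One way to capture these answers is as a small, version-controlled spec that travels with the project. The sketch below uses a hypothetical `ModelDesign` dataclass; the field names and example values are illustrative assumptions, not a standard format. Its `open_questions` method lists anything left blank, so gaps are visible before the project is handed to engineers.

```python
from dataclasses import dataclass, field


@dataclass
class ModelDesign:
    """Hypothetical design 'draft' recording answers from the design phase."""
    goal: str                    # what the model is for
    training_data: list[str]     # what data is needed
    evaluation: str              # how the model will be trained and evaluated
    deployment_target: str       # how it will be deployed in production
    metrics: dict[str, float]    # performance metrics and minimum acceptable values
    alerts: list[str] = field(default_factory=list)  # monitoring and alerting to set up

    def open_questions(self) -> list[str]:
        # Return the names of fields that are still empty.
        return [name for name, value in vars(self).items() if not value]


draft = ModelDesign(
    goal="Recommend products to repeat customers",
    training_data=["purchase history", "product catalog"],
    evaluation="Hold out the most recent month of purchases",
    deployment_target="",  # not decided yet
    metrics={"precision": 0.8},
)
print(draft.open_questions())  # ['deployment_target', 'alerts']
```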

Data Management

Data management can be included in the design component when building an MLOps workflow. The data management component of MLOps covers all aspects of data acquisition and storage. Data needs to be preprocessed, stored, and managed before models are created. The data doesn’t have to be handled yet at this stage, but the guidelines for how data management will be done should be figured out.
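Data management guidelines can be written down as checks that later stages enforce automatically. This sketch assumes records arrive as Python dictionaries and uses a hypothetical `SCHEMA` of field names and types; a real project would derive both from its own data.

```python
from typing import Any

# Hypothetical guideline: every record must have these fields with these types.
SCHEMA: dict[str, type] = {"customer_id": str, "amount": float, "country": str}


def validate(record: dict[str, Any]) -> list[str]:
    """Return the problems found in one record (an empty list means it is valid)."""
    problems = []
    for name, expected in SCHEMA.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected):
            actual = type(record[name]).__name__
            problems.append(f"{name}: expected {expected.__name__}, got {actual}")
    return problems


records = [
    {"customer_id": "c1", "amount": 19.99, "country": "DE"},
    {"customer_id": "c2", "amount": "12"},  # wrong type and a missing field
]
for record in records:
    print(validate(record))
```

Records that fail validation can then be quarantined or fixed before they reach training, instead of surfacing later as hard-to-trace model errors.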

2. Model Development

This component of the MLOps workflow handles the “meat” of creating the machine learning model. Here, the ML model is engineered and the data is cleaned using the steps configured in the design phase. The focus is on building, training, and testing the ML models.

Model development is typically done in notebooks like Jupyter or Google Colab for smaller projects, or in IDEs suited to larger Python codebases (e.g., PyCharm, VS Code). Common frameworks used to create ML models in the model development phase include TensorFlow and PyTorch.

During the model development phase, data scientists and engineers will:

- Develop model architecture

- Train the model

- Test the model

- Perform model validation

- Iterate if needed
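The develop, train, test, validate, and iterate loop can be illustrated without any ML framework. In this sketch the “model” is deliberately tiny (a single threshold on one synthetic feature), and the iteration step is a simple search over finer candidate grids; in practice, the architecture and training would come from a framework such as TensorFlow or PyTorch. The habit it shows is keeping training, validation, and test data separate.

```python
import random


def make_data(n: int, seed: int) -> list[tuple[float, int]]:
    # Synthetic data: the label is 1 when the feature exceeds 0.6, with 10% label noise.
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.6)
        if rng.random() < 0.1:
            y = 1 - y
        data.append((x, y))
    return data


def accuracy(threshold: float, data: list[tuple[float, int]]) -> float:
    # The "model" predicts 1 when the feature is above the threshold.
    return sum(int(x > threshold) == y for x, y in data) / len(data)


train, validation, test = make_data(600, 0), make_data(200, 1), make_data(200, 2)

best_threshold, best_val = 0.0, -1.0
for step in (0.2, 0.1, 0.05):  # iterate: each pass searches a finer grid
    candidates = [i * step for i in range(int(1 / step) + 1)]
    # Train: choose the threshold that fits the training data best.
    threshold = max(candidates, key=lambda t: accuracy(t, train))
    # Validate: keep this version only if it beats earlier ones on validation data.
    val = accuracy(threshold, validation)
    if val > best_val:
        best_threshold, best_val = threshold, val

# Test: report performance once, on data never used to choose the model.
print(f"threshold={best_threshold:.2f} test accuracy={accuracy(best_threshold, test):.2f}")
```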

Continuous Integration & Continuous Deployment (CI/CD)

Continuous Integration / Continuous Deployment, or CI/CD, is a software development practice that continuously integrates, tests, and deploys code changes. Many development components could be expanded on, but CI/CD is especially relevant in industry and worth knowing a little more about.

In a mature MLOps workflow (basic MLOps workflows sometimes don’t incorporate CI/CD), CI/CD is used to automate the process of building, testing, and deploying machine learning models as code changes are made. For example, a data scientist might make a change to a machine learning model and push the change to a version control system like Git. The CI/CD pipeline would then automatically build the model, run tests to make sure the change is valid and doesn’t cause issues, and deploy the model to production if the tests pass.

This saves a lot of manual work: the time invested in setting up the pipeline pays off throughout the model’s use.
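The “run tests, then deploy only if they pass” step can be sketched as a small gate that a CI job runs after training. The metric names, thresholds, and the rule that a candidate must not underperform the current production model are assumptions for illustration; a real pipeline would read the metrics from the training run.

```python
# Hypothetical minimum quality bar a new model must meet before deployment.
THRESHOLDS = {"accuracy": 0.90, "recall": 0.80}


def check_model(metrics: dict[str, float], baseline: dict[str, float]) -> list[str]:
    """Return the reasons a candidate model should NOT be deployed (empty list = pass)."""
    failures = []
    for name, minimum in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name} was not reported")
        elif value < minimum:
            failures.append(f"{name}={value:.3f} is below the minimum {minimum:.3f}")
        elif name in baseline and value < baseline[name]:
            failures.append(f"{name}={value:.3f} is worse than production ({baseline[name]:.3f})")
    return failures


def exit_code(failures: list[str]) -> int:
    # In CI, a non-zero exit code fails the job, which blocks the deploy step.
    return 1 if failures else 0


candidate = {"accuracy": 0.93, "recall": 0.78}   # hypothetical metrics from the new run
production = {"accuracy": 0.92, "recall": 0.81}  # hypothetical metrics of the deployed model
failures = check_model(candidate, production)
for reason in failures:
    print("FAIL:", reason)
```

In a real CI job, the script would end with `sys.exit(exit_code(failures))`. Here, recall falls below its minimum, so the job would fail and the deploy step would not run.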

3. Operations

The operations phase in an MLOps workflow handles the ML model after the bulk of it is already configured and built out.

This phase uses these common practices:

- Deployment

- Monitoring

- Model management

- Model serving

- Scaling

This isn’t an exhaustive list, and every MLOps workflow is different, so some workflows might not use all of these parts. However, these are the practices most commonly seen across MLOps workflows.

Deployment

Once the ML model is built, it needs to be deployed efficiently, either to a cloud-based platform or to on-premises servers. CI/CD is also sometimes considered part of this step of the operations phase.
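Deployment usually starts by packaging the trained model as an artifact that the serving environment loads. This sketch uses a hypothetical `ThresholdModel`, stores its parameters as JSON in a temporary directory (standing in for artifact storage), and names the file after a SHA-256 hash of its contents so each deployed artifact is identifiable and can be checked for tampering. Real ML frameworks have their own model serialization formats.

```python
import hashlib
import json
import tempfile
from pathlib import Path


class ThresholdModel:
    """Hypothetical toy model: predicts 1 when the input exceeds a learned threshold."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold

    def predict(self, x: float) -> int:
        return int(x > self.threshold)


def save_artifact(model: ThresholdModel, directory: Path) -> tuple[Path, str]:
    # Serialize the parameters and name the file after their content hash.
    payload = json.dumps({"threshold": model.threshold}).encode("utf-8")
    digest = hashlib.sha256(payload).hexdigest()
    path = directory / f"model-{digest[:12]}.json"
    path.write_bytes(payload)
    return path, digest


def load_artifact(path: Path, expected_digest: str) -> ThresholdModel:
    # Check the artifact wasn't altered before the serving process uses it.
    payload = path.read_bytes()
    if hashlib.sha256(payload).hexdigest() != expected_digest:
        raise ValueError("artifact checksum mismatch")
    return ThresholdModel(**json.loads(payload))


with tempfile.TemporaryDirectory() as tmp:
    artifact, digest = save_artifact(ThresholdModel(0.6), Path(tmp))
    served = load_artifact(artifact, digest)
    print(artifact.name, served.predict(0.7), served.predict(0.5))
```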

Model Management & Monitoring

Often there are multiple versions of a model in production, so this step handles managing those versions. Monitoring tracks an ML model’s performance in production and specifically looks for negative changes such as model degradation and data drift.

This step can be difficult, but many tools make it easy for organizations to quickly incorporate model management, tracking, and monitoring. ClearML, for example, offers a complete suite of features that make creating an MLOps workflow easy. Artifact and model tracking is one feature that can be added with little effort: by letting you create and access artifacts from your code, ClearML enables automatic tracking and versioning for all of your models.

Specific examples we’ll expand on include model versioning, model performance monitoring, and model drift detection (although there are many other features of model management and monitoring).

- Model versioning: A tool like DVC or Seldon can be used to keep track of model versions and ensure the right one is being used. Model versioning allows you to switch between previous and new model versions and easily compare their performance.

- Model performance monitoring: Metrics such as accuracy, precision, and recall are collected, stored, and monitored. This helps identify model degradation (if you’re wondering what model degradation is and how to avoid it, check out this article).

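- Model drift detection: Compare the data and predictions the model sees in production against past measurements, such as its training data and earlier performance, to detect drift, and periodically re-fit (re-train) the model on updated data when drift appears.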
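The monitoring and drift bullets can be sketched with the Python standard library. `precision_recall` computes two of the metrics mentioned above from logged labels and predictions. For drift, the sketch swaps in one common statistic, the population stability index (PSI), which compares how a feature is distributed in production with how it was distributed in training. The 10 bins and the 0.2 alert level are widely used rules of thumb, not fixed standards, and the data here is synthetic.

```python
import math
import random


def precision_recall(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    # Precision: share of predicted positives that were correct.
    # Recall: share of actual positives the model found.
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population stability index of production (actual) vs. training (expected) values."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the training range fall into the edge bins.
            i = min(max(int((v - lo) / width), 0), bins - 1)
            counts[i] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


print(precision_recall([1, 0, 1, 1, 0], [1, 1, 0, 1, 0]))

rng = random.Random(0)
training = [rng.gauss(0, 1) for _ in range(5000)]
stable = [rng.gauss(0, 1) for _ in range(5000)]   # same distribution as training
shifted = [rng.gauss(1, 1) for _ in range(5000)]  # production data has drifted
for name, sample in [("stable", stable), ("shifted", shifted)]:
    score = psi(training, sample)
    print(name, round(score, 3), "ALERT: consider retraining" if score > 0.2 else "ok")
```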

Scaling

Many MLOps workflows handle scaling as part of the management and monitoring phase. This includes scaling resources up or down as needed to handle changes in workload, for example by adjusting CPU and GPU allocation, by using container orchestration systems (e.g., Kubernetes) to scale data-processing tasks up or down, and through vertical scaling (giving a machine more resources) or horizontal scaling (adding more machines or replicas).
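For horizontal scaling, Kubernetes’ Horizontal Pod Autoscaler computes the desired number of replicas as `ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping changes that fall within a tolerance (10% by default). The sketch below reproduces that rule in Python, with min/max bounds, to show how a model-serving deployment might react to load; the function and parameter names are illustrative, not Kubernetes configuration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count for a serving deployment, following the HPA scaling rule."""
    ratio = current_metric / target_metric
    # Ignore small fluctuations so the deployment doesn't flap up and down.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU utilization against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
# Load drops to 20%: scale in.
print(desired_replicas(4, 20, 60))  # 2
```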

How is MLOps Different from DevOps?

DevOps is a combination of software development (Dev) and IT operations (Ops). MLOps and DevOps have numerous similarities because both focus on streamlining how software is built and deployed (in MLOps’ case, machine learning models), which can make it hard not to use the terms interchangeably.

Key differences between MLOps and DevOps:

- Goal: DevOps focuses on the development and deployment process, while MLOps focuses on the development and deployment process as well as the management and monitoring of the ML model post-deployment.

- Industry differences: DevOps is more general than MLOps. DevOps doesn’t always address the challenges of machine learning the way MLOps does, and MLOps is better suited to machine learning-specific tasks, using tools such as Jupyter notebooks and TensorFlow. These tools can also be used in DevOps, but that is less common.

- Collaboration: MLOps requires collaboration between data scientists, IT professionals, and salespeople because of the various areas of expertise involved (e.g., the design phase draws on business expertise). DevOps, meanwhile, relies mostly on developers and engineering operations teams; it still needs other areas of expertise, but usually less than MLOps does.

How is MLOps Different from ModelOps?

ModelOps is a relatively new field that deals with enterprise operations and governance for ML models. MLOps and ModelOps both support the model from development to management, but they take different approaches. ModelOps uses much more business input than MLOps and focuses more on the customer experiences that shape the machine learning model. For that reason, MLOps is perceived as more technical than ModelOps.

Summary

MLOps is an ever-growing field in AI that helps AI-driven solutions go to market faster. If you’re looking for an MLOps platform that can help your product or business incorporate machine learning, consider ClearML – an open-source platform that automates MLOps solutions for thousands of data science teams across the world.

Get started with ClearML by using its free tier servers or by hosting your own. Read the documentation here. You’ll find more in-depth tutorials about ClearML on its YouTube channel and they also have a very active Slack channel for anyone that needs help. If you need to scale your ML pipelines and data abstraction or need unmatched performance and control, please request a demo. To learn more about ClearML, please visit: https://clear.ml/.