For a quick refresher on what hyperparameter optimization is and which frameworks and strategies ClearML supports out of the box, check out our previous blog post!

TL;DR

- ClearML can track your experiments with minimal changes to your code because it automatically records arguments and their corresponding outputs.

- Having access to the inputs, the metrics and the code environment itself lets you optimize practically anything: any model, any black box.

- With the optimization itself happening outside of your code, as multiple ClearML tasks, it’s easy to execute these tasks in parallel on multiple machines if required.

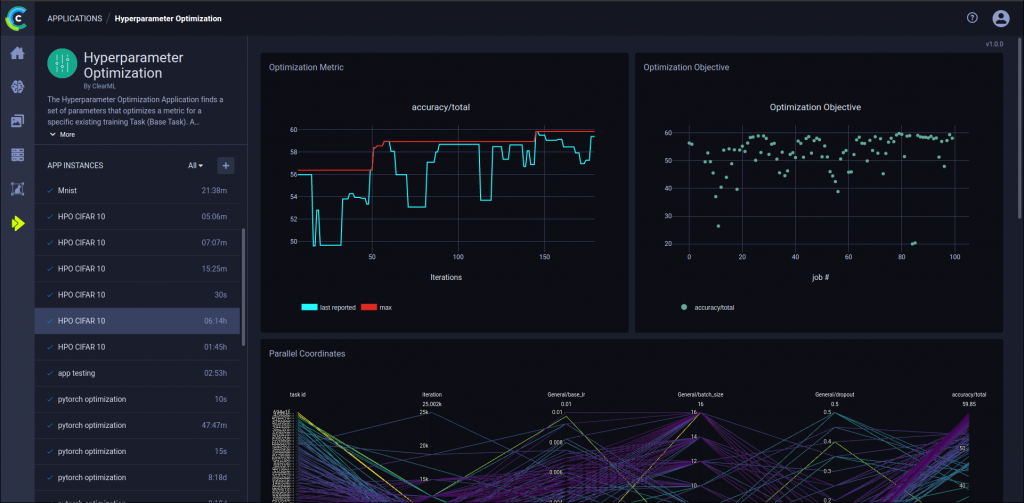

- Use the clean new UI to get an overview of the Hyperparameter Optimization process while it’s running and easily find the best performing models.

Dummy Model Creation

To show just how simple it can be, consider an example for a dummy model:
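A minimal sketch of what such a script might look like, assuming the `clearml` package is installed and configured against a server. The project and task names are hypothetical, the starting value of 10 epochs is an assumption, and the ClearML import is kept inside `main()` only so that `dummy_model` can be tried without ClearML installed.

```python
def dummy_model(epochs):
    """Stand-in for a real training run: the output is simply 10x the epochs."""
    return 10 * epochs


def main():
    # Imported lazily so dummy_model stays usable without ClearML installed.
    from clearml import Task

    # The two "magic lines": start experiment tracking and get the task object.
    # Project and task names are hypothetical.
    task = Task.init(project_name="HPO blog", task_name="dummy model template")

    # Hyperparameters in a plain dict, connected to the task so they are logged
    # and can be overridden when the task is cloned.
    params = {"epochs": 10}
    params = task.connect(params)

    # Create and "run" the dummy model to get its output.
    output = dummy_model(params["epochs"])

    # Report the output as a scalar so an optimizer can read it later.
    task.get_logger().report_scalar(
        title="model_output", series="model_output", value=output, iteration=0
    )
```

Calling `main()` once registers the run as a task that can later serve as the template.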

We did a few things here, so let’s break it down.

First of all, the two magic lines. They kick-start the experiment tracking and give us the ClearML task object.

Next, we create our hyperparameters. In this case, we put them in a dictionary, but you can put them anywhere: a class, a dict, a config file, command line arguments, whatever you prefer. Then we connect them to the task to make sure they’re logged.

ClearML automatically captures parameters from tools such as Python argparse, Click and Hydra, as well as outputs reported to tools such as TensorBoard and Matplotlib. In those cases, no additional code is even necessary!

Now we create a dummy model, just for demonstration purposes.

This can be anything and that’s the real power of ClearML’s approach to Hyperparameter Optimization!

The only prerequisites are that it has input parameters we want to manipulate and output metric(s) we want to optimize.

In this case, to keep things simple, the output of our “model” will just be 10 times the number of epochs. If only it were that easy for real models…

We’ll also immediately run it and get the output on the following line.

Finally, we need to report the model output to ClearML, so it can tell the optimizer how this hyperparameter combination worked out and possibly adjust its strategy. We do this by reporting it as a scalar to the task’s logger. We can also log everything else of interest, such as plots, images, comments, other metrics etc. But we’ll keep it simple for now. Remember that for a lot of frameworks, ClearML will log these scalars out of the box! Check the documentation for more information.

Getting the task into ClearML

All that’s left to do now is to run this task once to get it into the system. Consider it a “template” task for hyperparameter optimization. Of course, in ClearML fashion, we can run it either locally or send it remotely to one of our agents.

And… that’s all you need to start optimizing. From here, you can easily tell ClearML to repeat this task, but with different parameters instead. We can do that manually by cloning the task and editing the hyperparameters ourselves.
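The same manual clone-and-edit step can also be sketched with the SDK. This is an illustration under assumptions, not the only way to do it: the function names, the clone's task name and the `default` queue are hypothetical, and parameters from a connected dict are assumed to live under the `General` section.

```python
def build_overrides(values, section="General"):
    """Prefix each parameter name with its section, e.g. 'General/epochs'."""
    return {f"{section}/{name}": value for name, value in values.items()}


def clone_and_enqueue(template_task_id, values, queue_name="default"):
    # Imported lazily so build_overrides stays usable without ClearML installed.
    from clearml import Task

    # Create a copy of the template task (the name is hypothetical).
    cloned = Task.clone(source_task=template_task_id, name="dummy model (manual clone)")

    # Override only the hyperparameters we care about on the clone.
    for name, value in build_overrides(values).items():
        cloned.set_parameter(name, value)

    # Hand the clone to an agent listening on the given queue.
    Task.enqueue(cloned, queue_name=queue_name)
    return cloned.id
```

For example, `clone_and_enqueue("<template task id>", {"epochs": 20})` would queue one extra run with 20 epochs.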

But manually editing tasks is not the way to go in MLOps, is it? So we’re automating it and letting ClearML do the cloning. We just have to tell it the parameter ranges.

Automated Hyperparameter Optimization straight from the interface

For when you don’t even want to think about it.

With our dummy model task in the system as a template, it’s super easy to set up an HPO task around it. In the ClearML interface under Applications, you’ll find the HPO wizard.

After clicking on the plus sign to launch a new HPO task, you’ll find a form that lets you quickly set up the parameter ranges and other important settings.

The most important fields to fill in are:

- Base Task ID: the ID of our “template” task.

- Optimization Strategy: grid search and random search are available, but avoid them if possible. Use Optuna or HpBandSter (BOHB) instead. They can optimize many hyperparameters simultaneously and efficiently by relying on techniques such as early stopping and smart resource allocation. We’ll cover the ins and outs of these optimizers in an upcoming blog post.

- Title & Series: the title and the series of the scalar that we want to optimize. In our case, this is “model_output”.

- Discrete or uniform parameters: the hyperparameters we want to manipulate. Check the template task for their exact names and prepend the section name. Here we’d write General/epochs to refer to the epochs hyperparameter, which is set to 10 in the template task.

After clicking “Launch New” you’ll be guided to a dashboard that serves as an overview of your optimization process. You’ll see a leaderboard of the different tasks, a graph showing the main metric to be optimized over time, and much more.

Launching our HPO task will create a whole bunch of tasks in our project, all of which are clones of our “template” but with different hyperparameters injected. So in our project, we’d have 1 template task, 1 optimization task and a whole bunch of clones of the template task originating from that optimization task. All of these can of course be compared, ranked, sorted, etc. or you can use the overview dashboard we saw above.

Automated Hyperparameter Optimization in the Python SDK

For when you want more control

Running HPO straight from the dashboard is pretty cool, but sometimes you want more control and flexibility. So naturally, the same functionality is available in the Python SDK, too.

The HyperParameterOptimizer class takes the same arguments as the dialog box above, so not much new there, but now that it’s in code, we can even go further with our automation. For example, you could position this HPO process as part of a ClearML pipeline that handles a completely automatic retraining loop.
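As a rough sketch of how that setup might look, assuming `clearml` and `optuna` are installed: the project and task names, the queue name, the epoch range and the job limits below are all illustrative assumptions, and `build_optimizer` is a hypothetical helper.

```python
# Plain settings mirroring the fields of the HPO wizard; values are illustrative.
HPO_SETTINGS = {
    "objective_metric_title": "model_output",
    "objective_metric_series": "model_output",
    "objective_metric_sign": "max",  # we want the largest model output
    "max_number_of_concurrent_tasks": 2,
    "total_max_jobs": 10,
}


def build_optimizer(base_task_id, queue_name="default"):
    # Imported lazily so the settings above can be inspected without ClearML.
    from clearml import Task
    from clearml.automation import HyperParameterOptimizer, UniformIntegerParameterRange
    from clearml.automation.optuna import OptimizerOptuna  # needs the optuna package

    # The optimizer is itself a ClearML task (names are hypothetical).
    task = Task.init(
        project_name="HPO blog",
        task_name="dummy model optimization",
        task_type=Task.TaskTypes.optimizer,
        reuse_last_task_id=False,
    )

    optimizer = HyperParameterOptimizer(
        base_task_id=base_task_id,  # ID of the "template" task
        hyper_parameters=[
            # Section name prepended, as in the wizard; the range is an assumption.
            UniformIntegerParameterRange("General/epochs", min_value=1, max_value=50),
        ],
        optimizer_class=OptimizerOptuna,
        execution_queue=queue_name,  # queue the cloned tasks are sent to
        **HPO_SETTINGS,
    )
    return task, optimizer
```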

The code can run on your local machine and spawn new tasks in the queue for the agents to pick up and process. To do this, add the following lines to the script. They will monitor the progress and report back on how it’s going.
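A sketch of what those lines might look like, wrapped in a hypothetical helper. It assumes `optimizer` is an already configured `HyperParameterOptimizer` instance; the report period and time limit are illustrative values.

```python
def run_and_monitor(optimizer, report_period_min=1.0, time_limit_min=60.0):
    """Drive the optimization from the local machine while agents run the trials."""
    # How often (in minutes) progress is reported back to the optimizer task.
    optimizer.set_report_period(report_period_min)

    # Start the optimization in the background; cloned tasks go to the queue.
    optimizer.start()
    try:
        # Cap the total duration of the optimization process.
        optimizer.set_time_limit(in_minutes=time_limit_min)
        # Block until the optimization finishes or the time limit is hit.
        optimizer.wait()
    finally:
        # Make sure the background optimization is stopped.
        optimizer.stop()
```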

If you want to, you can also easily run the optimizer task itself remotely by using this code instead:
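One way this could look, as a sketch: `task` is assumed to be the optimizer's own ClearML task and `optimizer` a configured `HyperParameterOptimizer`. The helper name is hypothetical, and `services` is assumed to be a queue served by an agent in your setup.

```python
def run_optimizer_as_service(task, optimizer, queue_name="services"):
    """Send the optimizer task itself to an agent instead of running it locally."""
    # When run locally, this enqueues the task and terminates the local process.
    # When already executed by an agent, the call does nothing and we continue.
    task.execute_remotely(queue_name=queue_name, exit_process=True)

    # From here on, the code runs on the agent.
    optimizer.start()
    optimizer.wait()
    optimizer.stop()
```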

If you want to dig deeper, don’t forget to check out our documentation pages on the subject 🙂

Sit back and relax

While your agents do the work!

When the task is completed, you can see the top-performing task in the leaderboard at the top right, along with its parameters. You can also click on the task ID to open the detail view and check out, for example, some debug images or the console output to make sure everything is indeed performing well.
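When the optimization was driven from the SDK, the same leaderboard information can be pulled in code. A small sketch, assuming `optimizer` is the `HyperParameterOptimizer` instance that ran the search; the helper name is hypothetical.

```python
def top_experiment_summary(optimizer, top_k=3):
    """Return (task ID, parameters) pairs for the best-performing tasks."""
    return [
        (task.id, task.get_parameters())
        for task in optimizer.get_top_experiments(top_k=top_k)
    ]
```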

If you made it through this blog post: congrats! Hopefully, you now see the potential in the approach that ClearML has taken to HPO. If you want to dig deeper, there are GitHub examples for you to sift through. And if you’re feeling ambitious, feel free to implement your own optimizer and open a pull request!