Originally published by Ryan Greenhalgh. Republished with the author's approval.

AI models get smarter, more accurate, and therefore more useful over the course of their training on large datasets that have been painstakingly curated, often over a period of years.

But in real-world applications, datasets start small. To design a new drug, for instance, researchers start by testing a compound and want to use AI to predict the best possible variant of it. However, the available datasets are too small for conventional AI, leaving models without the raw material they need to train on.

At DeepMirror, we’ve pioneered new AI training algorithms that learn to predict from small datasets. Our new training algorithm, DeepMirror Spark, reduces the amount of data required to train computer vision models by 10-100x (check out how this works for biomedical images here: https://deepmirror.ai/2021-12-09-spark-instance-segmentation/).

The jump from training an AI model to delivering the end result is not simple. In fact, most machine learning models never make it into production [1]. Yes, that’s right: all those hours clocked by talented engineers never reach customers in the real world. When I first heard this statistic, I was shocked. These models are the most valuable output of research, so how could this be the case? In this post, I’ll address some of the issues we often face when moving from machine learning research to production. One of the benefits of working at a start-up is that we can establish good processes from the outset and build robust internal machine learning and deployment workflows. This is what I’d like to share.

For each new project, we need to manage multiple trained models, grow our annotated datasets, compare the performance of different models, benchmark them against different datasets, and deploy the winners to our users. To optimise our research-to-production workflow, we therefore need to solve five engineering challenges:

- Log, monitor, and share experiments

- Data versioning and storage

- Reproduce and replicate

- Autoscaling GPU compute

- Serving and deploying

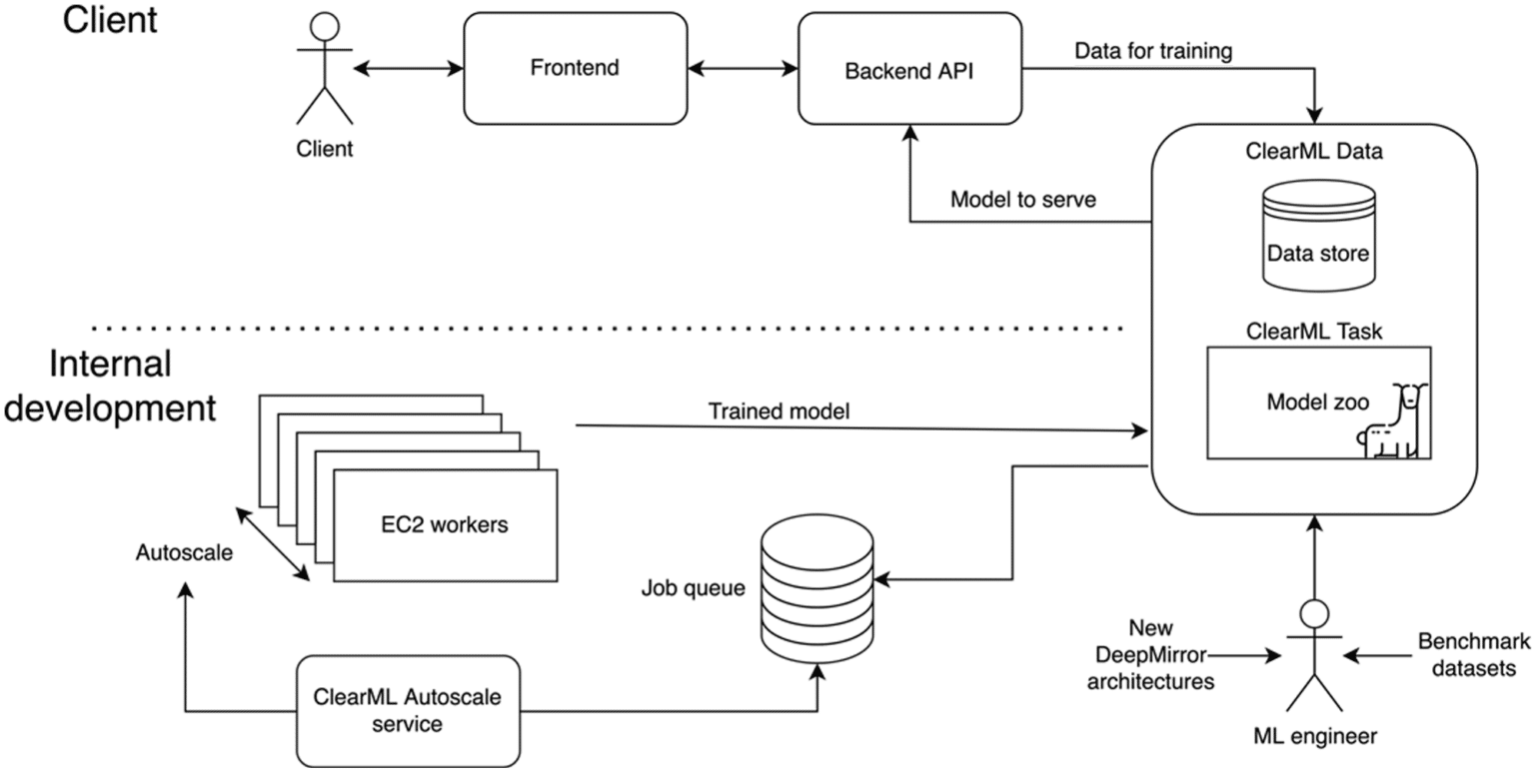

It would take a lot of time and money to build a system that manages each of these machine learning operations (MLOps) components from scratch. After researching several MLOps providers, we found that ClearML significantly outperformed the other offerings. ClearML is a Python-based MLOps platform that provides the tools to solve the research-to-production challenges outlined above. We were mainly interested in infrastructure that would automatically monitor and log model training, and in a simple deployment step. Figure 1 summarises our machine learning lifecycle and system design. There are two main processes:

- The machine learning team can create and design different models, train the models with scalable compute, and benchmark the models across the team.

- The client can interact with the backend API by submitting data and using models to make predictions on their data. It is also possible to train new models, which is handled by the same framework used by the machine learning team.

Let’s take a look at how we used ClearML to solve the five challenges mentioned above.

Log, Monitor, and Share Experiments

At DeepMirror, we want to develop new technologies to rapidly train our AI and deploy the best-performing models to clients. To select those models, we need to quickly compare the performance of different models and benchmark them against other datasets. This requires us to log, monitor, and share results from many experiments across research teams. Without logging and monitoring, experiments sit unused on local machines, or researchers have to slowly send files back and forth, accumulating technical debt.

ClearML made it easy to log and monitor experiments. To set up automatic logging, all we needed to do was initialize a ClearML task at the start of our training script.
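A minimal sketch of that initialization, using ClearML's `Task.init` and `Task.connect`. The project name, task name, and hyperparameters are hypothetical placeholders, not DeepMirror's actual configuration, and the import sits inside the function only so the sketch can be loaded without ClearML installed:

```python
"""Sketch: turn on ClearML experiment logging at the start of a training script."""


def default_hyperparams() -> dict:
    # Hypothetical hyperparameters, for illustration only.
    return {"learning_rate": 1e-3, "batch_size": 16, "epochs": 50}


def start_experiment(project_name: str, task_name: str, params: dict):
    # Imported here so this sketch loads without ClearML installed;
    # in a real training script the import usually sits at the top of the file.
    from clearml import Task

    # Registers the run with the ClearML server and starts automatic capture
    # of console output, TensorBoard scalars, and installed packages.
    task = Task.init(project_name=project_name, task_name=task_name)
    # Logs the dictionary as the task's hyperparameters; the returned dict
    # reflects any values edited in the UI when the task is cloned and re-run.
    params = task.connect(params)
    return task, params


# Usage (requires a configured ClearML server; names are hypothetical):
#   task, params = start_experiment("segmentation", "baseline", default_hyperparams())
#   ...the usual training loop follows...
```

Because the hyperparameters are connected rather than hard-coded, a cloned task can override them without any code change.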

From then on, all terminal output and TensorBoard logs were saved remotely for other engineers to view in the ClearML UI.

Data Versioning and Storage

For internal ML research, we need engineers to be able to efficiently access datasets to create new models. During this process, it can be difficult to manage access to collections of datasets between engineers, giving rise to data versioning and storage challenges.

In addition to sharing logs and monitoring experiments across the ML team, we need to be able to replicate datasets when training on different machines. ClearML makes it easy to upload and replicate datasets with a few lines of code: to add a new dataset, our engineers push it to our cloud storage with a short script.
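A minimal sketch of such an upload script, using ClearML's `Dataset.create`, `add_files`, `upload`, and `finalize`. The local path, dataset name, project, and bucket URL are hypothetical, and the storage target is an assumption (omitting `output_url` falls back to ClearML's default files server):

```python
from typing import Optional


def upload_dataset(local_path: str, dataset_name: str, dataset_project: str,
                   output_url: Optional[str] = None) -> str:
    """Create, upload, and finalize a new ClearML dataset version; return its ID."""
    # Imported lazily so the sketch loads without ClearML installed.
    from clearml import Dataset

    # Start a new dataset version under the given project.
    dataset = Dataset.create(dataset_name=dataset_name, dataset_project=dataset_project)
    # Register every file under local_path with this version.
    dataset.add_files(path=local_path)
    # Push the files to storage; output_url may point at a cloud bucket
    # (e.g. "s3://my-bucket/datasets"), otherwise the default files server is used.
    dataset.upload(output_url=output_url)
    # Finalizing locks the version so it can no longer be modified.
    dataset.finalize()
    return dataset.id


# Usage (paths and names are hypothetical):
#   dataset_id = upload_dataset("data/cell-images", "cell-images", "segmentation")
```

Finalizing each upload gives every dataset version an immutable ID, which is what makes later experiments reproducible.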

It’s also easy to replicate the dataset locally from within a task.
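A minimal sketch of that retrieval step, using ClearML's `Dataset.get` and `get_local_copy`. The function name and arguments are hypothetical; pinning a `dataset_id` is shown as an option because it fixes the exact version used:

```python
from typing import Optional


def fetch_dataset(dataset_project: str, dataset_name: str,
                  dataset_id: Optional[str] = None) -> str:
    """Return the path of a local, read-only copy of a ClearML dataset."""
    # Imported lazily so the sketch loads without ClearML installed.
    from clearml import Dataset

    if dataset_id is not None:
        # Pin an exact version for reproducibility.
        dataset = Dataset.get(dataset_id=dataset_id)
    else:
        # Otherwise resolve the dataset by project and name
        # (typically the most recent matching version).
        dataset = Dataset.get(dataset_project=dataset_project, dataset_name=dataset_name)
    # Downloads the files into the local cache (or reuses a cached copy)
    # and returns the folder path.
    return dataset.get_local_copy()


# Usage inside a training task (names are hypothetical):
#   data_dir = fetch_dataset("segmentation", "cell-images")
```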

This makes it easy for different ML engineers working on different machines to programmatically retrieve datasets locally.

Reproduce and Replicate

During the development process we need to be able to replicate older versions of our code. For example, a researcher might want to retrain an old model with a new hyperparameter, or on a different dataset. Usually, this would require the engineer to replicate the dependency environment and datasets. Ensuring that the correct environment is set up can often be a time sink for the engineer.

The ClearML UI makes it easy to replicate and reproduce different experiments (tasks). A task can be cloned, have specific hyperparameters changed, and then be retrained. This makes it easy to rerun experiments without even needing Python installed locally: everything can be done through the browser.
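The clone-and-edit workflow above happens in the UI, but the same steps can also be scripted. A minimal sketch using ClearML's `Task.get_task`, `Task.clone`, `set_parameter`, and `Task.enqueue`. It assumes the hyperparameters were connected as a plain dictionary (so they live in the `General` section), and the queue name `default` and the naming helper are hypothetical:

```python
def clone_task_name(base_name: str, learning_rate: float) -> str:
    # Hypothetical naming convention so clones are easy to spot in the UI.
    return f"{base_name} (lr={learning_rate})"


def clone_and_enqueue(source_task_id: str, learning_rate: float,
                      queue_name: str = "default"):
    """Clone an existing task, change one hyperparameter, and queue it for an agent."""
    # Imported lazily so the sketch loads without ClearML installed.
    from clearml import Task

    source = Task.get_task(task_id=source_task_id)
    # The clone starts as a draft with the source's code reference,
    # environment, and hyperparameters.
    cloned = Task.clone(source_task=source, name=clone_task_name(source.name, learning_rate))
    # Dictionaries connected via task.connect() are stored under "General".
    cloned.set_parameter("General/learning_rate", learning_rate)
    # Any ClearML Agent listening on queue_name will pull and run the clone.
    Task.enqueue(cloned, queue_name=queue_name)
    return cloned
```

Scripting the clone is mainly useful for sweeps, where the same edit is applied to many copies of one baseline task.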

Autoscaling GPU Compute

Additionally, we need to be able to train multiple models to benchmark model performance. To get fast turnaround, it is critical to have access to substantial GPU compute. Running many GPU instances on a cloud provider can be expensive, so ideally we want just the right amount of resources on demand. To meet this requirement, we need autoscaling GPU compute.

One of the features we really love about ClearML is the ClearML Agent and its autoscaling service. The ClearML Agent can be installed on our local GPU machines, and when a new job is sent to the queue (by an ML engineer, or by a client through the browser), the Agent receives and executes the task locally (illustrated in Figure 1). At first, we used a few on-premise machines to train models, but we quickly ran out of compute during surges in demand. ClearML saved us again, this time with its Autoscaler. We used ClearML's AWS autoscaling service to monitor the number of jobs in the task queue and spin up EC2 GPU instances, with ClearML Agents installed, to execute all the queued tasks. The autoscaling feature significantly accelerated our ML model development and deployment.
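ClearML's Autoscaler is configured rather than hand-written, but the decision it automates can be illustrated. The following is a simplified, hypothetical scaling policy, not ClearML's actual implementation: idle agents absorb queued tasks first, new GPU instances are launched for the remainder up to a budget cap, and surplus idle instances are released. All names here are illustrative:

```python
from dataclasses import dataclass


@dataclass
class PoolState:
    queued_tasks: int     # tasks waiting in the monitored queue
    busy_instances: int   # GPU instances whose agent is running a task
    idle_instances: int   # GPU instances whose agent is waiting for work
    max_instances: int    # hard cap to bound cloud spend


def plan_scaling(state: PoolState) -> dict:
    """Return how many instances to launch and how many idle ones to stop."""
    running = state.busy_instances + state.idle_instances
    # Idle agents pull queued tasks first, so only the remainder needs new machines.
    unserved = max(0, state.queued_tasks - state.idle_instances)
    # Never launch past the budget cap.
    launch = min(unserved, max(0, state.max_instances - running))
    # Idle instances beyond what the queue needs are surplus. A production
    # autoscaler would typically also wait for an idle timeout before stopping them.
    stop = max(0, state.idle_instances - state.queued_tasks)
    return {"launch": launch, "stop": stop}
```

For example, with five queued tasks, two busy instances, one idle instance, and a cap of four, the policy launches one instance: the idle agent takes one task, and the cap leaves room for only one more machine. With an empty queue and two idle instances, it stops both.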

Serve and Deploy

A key part of our offering is giving clients the ability to train their own custom models. To do this, we need a system that lets ML engineers and clients interact through shared resources. Once our team has developed a working model, or a client has trained one, we need to be able to serve and deploy that model back to the client.

Serving models in this way enables clients to make predictions on their data. To do this, we simply use ClearML on our backend to cache a local copy of the model from a specific task in just a few lines of code.
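A minimal sketch of that caching step, using ClearML's `Task.get_task`, the task's `models["output"]` list, and `get_local_copy`. It follows the pattern in ClearML's documentation of treating the last output model as the latest snapshot; the function names are hypothetical:

```python
from typing import Sequence, TypeVar

T = TypeVar("T")


def latest_output_model(models: Sequence[T]) -> T:
    # Following ClearML's documented pattern, the last output model is
    # treated as the most recent snapshot saved during training.
    if not models:
        raise ValueError("task has no output models")
    return models[-1]


def cache_model_weights(task_id: str) -> str:
    """Download (or reuse a cached copy of) a task's latest weights; return the path."""
    # Imported lazily so the sketch loads without ClearML installed.
    from clearml import Task

    task = Task.get_task(task_id=task_id)
    model = latest_output_model(task.models["output"])
    return model.get_local_copy()


# Usage on the backend (the task ID is hypothetical):
#   weights_path = cache_model_weights("<task-id>")
```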

In summary, ClearML has enabled us to smash through the research-to-production barrier. The platform makes it easy for our research team to quickly get models into production while monitoring their performance, without worrying about GPU resources. The team at ClearML and their community have also been great at providing support on the community Slack. Using ClearML as an all-in-one MLOps solution has saved us time and money, and it will continue to do so by letting us focus on developing our core technology.

Reference