By Katherine (Yi) Li, Data Scientist

Ten years ago, it would have seemed ridiculous to believe that people would someday unlock their phones with their faces. It used to be extremely difficult to create cartoon characters without profound drawing skills, but now we can easily turn photos into cartoon characters. Struggling with parallel parking? No worries, because self-parking systems are becoming standard equipment in vehicles. None of this would have been possible without the breakthroughs in computer vision.

In this article, we will be diving into the world of computer vision and discussing everything you need to know about computer vision basics:

- What is computer vision?

- How does computer vision work?

- Real-world applications of computer vision

- Benefits that computer vision could bring

- Concerns around computer vision and their corresponding best practices

Now, let’s dive in.

What is Computer Vision?

Computer vision is a field of Artificial Intelligence (AI) that focuses on developing algorithms and models that enable computers to interpret and understand visual information from the world. To put it simply, computer vision is the automation of human sight, giving machines the sense of sight that allows them to “see and explore” the world.

Generally speaking, computer vision includes tasks such as:

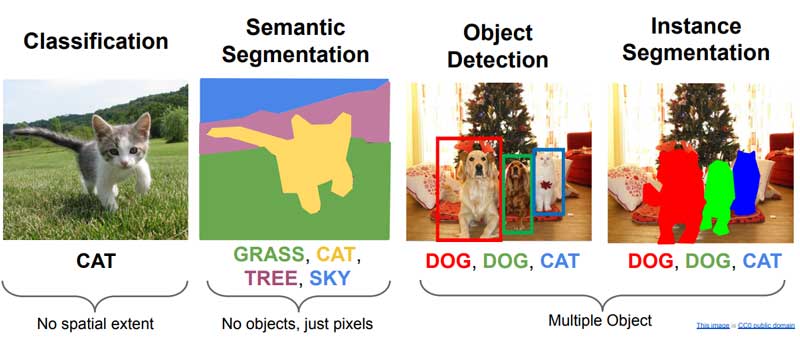

- Image recognition/classification: recognizing specific features in an image, such as faces, cats, or traffic signs;

- Object detection: identifying and locating objects within an image or video;

- Image segmentation: dividing an image into multiple segments, with each segment corresponding to a different object or region;

- Image formation/generation: creating an image or transforming an existing image into a different style.
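
To make the difference between the first three tasks concrete, here is a minimal sketch on a toy image. It is only an illustration: the 5x5 grid, the threshold rule, and the function names are assumptions made for this example, and a simple threshold stands in for the trained models that real systems use. Image generation is left out because it has no comparably small stand-in.

```python
# Toy 5x5 grayscale "image": 0 is background, values above 0 belong to one object.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 9, 0],
    [0, 0, 9, 9, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 0, 0, 0],
]


def segment(img, threshold=0):
    """Segmentation: one label per pixel (1 = object, 0 = background)."""
    return [[1 if px > threshold else 0 for px in row] for row in img]


def detect(mask):
    """Detection: a bounding box (top, left, bottom, right), or None if empty."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))


def classify(mask):
    """Classification: a single label for the whole image."""
    return "object" if any(any(row) for row in mask) else "background"


mask = segment(image)
print(classify(mask))  # one label for the image
print(detect(mask))    # where the object is
print(mask)            # which pixels belong to it
```

Classification answers "what is in the image", detection adds "where", and segmentation answers "which pixels exactly".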

Computer vision has grown by leaps and bounds over the past few years. The global computer vision market was valued at more than $12.50 billion in 2021 and is expected to grow at a compound annual growth rate (CAGR) of more than 12.5% during 2022-2026. The market is expected to keep growing as AI becomes a more integrated part of our daily lives.
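
For readers unfamiliar with CAGR, the short sketch below shows how compound growth plays out. It assumes the growth compounds yearly from the 2021 base and uses the two "more than" figures quoted above as lower bounds, so the results are a rough floor implied by those figures and not a forecast. The function name is made up for this example.

```python
def project_market_size(base_value, annual_rate, years):
    """Compound growth: value after `years` = base * (1 + rate) ** years."""
    return base_value * (1 + annual_rate) ** years


base_2021 = 12.5  # billions of USD, the lower bound quoted in the text
cagr = 0.125      # 12.5% per year, also a lower bound

for year in range(2022, 2027):
    size = project_market_size(base_2021, cagr, year - 2021)
    print(f"{year}: about ${size:.1f} billion")
```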

How Does Computer Vision Work?

You may wonder: how do we make a computer “see” things the way humans do? To teach a machine to recognize visual objects, we must train it on hundreds of thousands of visual examples, so a computer vision task typically begins with the acquisition of visual data, such as images or video. This data is then preprocessed to remove noise and improve its quality. For example, if we want to train a machine to recognize human faces, we need to feed it many face images to learn from, including images taken in low light or from different angles.
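
As a rough illustration of what preprocessing can look like, the sketch below scales pixel values into a common range and smooths out a noisy pixel with a 3x3 mean filter. These two steps are assumptions chosen for the example and not a prescribed pipeline; real projects usually rely on libraries such as OpenCV or NumPy instead of plain Python lists.

```python
def normalize(img):
    """Scale 8-bit pixel values (0-255) into the range 0.0-1.0."""
    return [[px / 255 for px in row] for row in img]


def mean_filter(img):
    """Reduce noise by replacing each pixel with the average of its 3x3 neighborhood.

    Border pixels average only over the neighbors that exist.
    """
    height, width = len(img), len(img[0])
    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            neighbors = [
                img[rr][cc]
                for rr in range(max(0, r - 1), min(height, r + 2))
                for cc in range(max(0, c - 1), min(width, c + 2))
            ]
            out_row.append(sum(neighbors) / len(neighbors))
        out.append(out_row)
    return out


# A flat gray patch with one noisy bright pixel in the middle.
noisy = [
    [100, 100, 100],
    [100, 255, 100],
    [100, 100, 100],
]
clean = mean_filter(normalize(noisy))
print(round(clean[1][1], 3))  # the outlier is pulled toward its neighbors
```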

Next, image processing techniques are applied to the data to extract useful information. For humans, it’s simple to understand the context or semantic meaning of an image, but for machines, images are nothing but a huge collection of pixels. Machines are trained to detect patterns in these pixel values. This is typically accomplished with a deep learning architecture called a Convolutional Neural Network (CNN).

In a nutshell, when an image is processed by a CNN, the layers of the network extract various features from its pixels, starting with basic characteristics such as edges and corners and gradually moving deeper into more complex features such as shapes. The final layers of the network are capable of identifying specific objects such as car headlights or tires.
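
The way an early layer responds to edges can be sketched with a single convolution. The example below is a simplification under stated assumptions: the kernel is written by hand, whereas a CNN learns its kernel values from data, and the tiny image and function name are made up for illustration.

```python
def convolve2d(img, kernel):
    """Slide `kernel` over `img` (no padding) and return the map of responses.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped.
    """
    k = len(kernel)
    out_h = len(img) - k + 1
    out_w = len(img[0]) - k + 1
    return [
        [
            sum(img[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hand-written vertical-edge kernel; a CNN would learn its kernels from data.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# Dark left half, bright right half: one vertical edge in the middle.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The response is zero over the flat regions and large where the window straddles the dark-to-bright boundary, which is the sense in which a filter "detects" an edge. Deeper layers apply the same operation to the feature maps of earlier layers.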

Once these features are extracted, the CNN outputs an array of numbers representing the probability that each object of interest is present in the image. It achieves this by learning to extract the features that are most useful for identifying the object. Over many training iterations, the network repeatedly compares its predictions with the correct answers and adjusts itself, so its predictions gradually become more accurate.
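
A common way to turn a network's raw outputs into probabilities, and to score how wrong a prediction is, is the softmax function paired with a cross-entropy loss. The sketch below assumes a three-class classifier; the class names and scores are hypothetical, and the article does not state which loss a particular model uses.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, true_index):
    """Loss is small when the true class receives a high probability."""
    return -math.log(probs[true_index])


labels = ["car", "truck", "bicycle"]  # hypothetical classes
scores = [2.0, 1.0, 0.1]              # hypothetical raw network outputs

probs = softmax(scores)
for label, p in zip(labels, probs):
    print(f"{label}: {p:.2f}")
print(f"loss if the image is a car: {cross_entropy(probs, 0):.3f}")
print(f"loss if the image is a bicycle: {cross_entropy(probs, 2):.3f}")
```

During training, the network's weights are nudged in the direction that lowers this loss, which is what makes the predictions improve from one iteration to the next.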

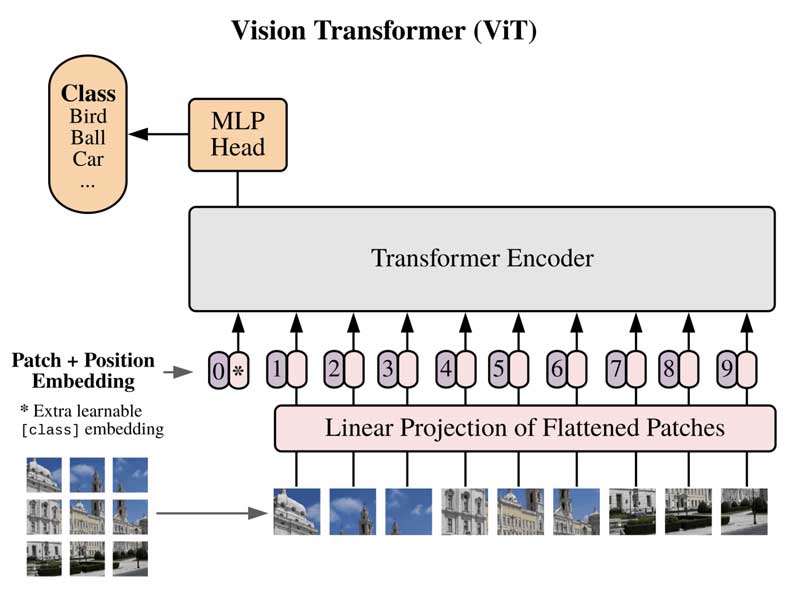

Although CNN is still the go-to architecture in the world of computer vision, the application of transformers in image classification offers a new approach. ViT, short for Vision Transformer, is a deep learning model that uses a transformer-based architecture to process images.

Introduced in 2021, ViT was inspired by the success of transformer models in Natural Language Processing (NLP). The key idea behind ViT is to treat an image as a sequence of tokens, where each token corresponds to a patch of pixels in the image. These patches are then flattened and fed through a transformer network, which learns to process the sequence of patches and make predictions.
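
The "image as a sequence of tokens" idea can be sketched in a few lines. This example covers only the patch-splitting and flattening step on a tiny single-channel image; the function name and image are assumptions for illustration. In the actual model, each flattened patch is also passed through a learned linear projection and combined with a position embedding before it reaches the transformer, which is omitted here.

```python
def image_to_patch_tokens(img, patch_size):
    """Split a 2D image into non-overlapping square patches and flatten each one.

    Returns a list of tokens in row-major patch order; each token is a flat
    list of patch_size * patch_size pixel values.
    """
    height, width = len(img), len(img[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    tokens = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            token = [
                img[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ]
            tokens.append(token)
    return tokens


# A 4x4 single-channel image whose pixel values are simply 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]

tokens = image_to_patch_tokens(image, patch_size=2)
print(len(tokens))  # number of patches in the sequence
print(tokens[0])    # the top-left patch, flattened
```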

If you are interested in learning more about ViT, please check out this paper.

In summary, computer vision works by using a combination of image processing techniques and machine learning algorithms to detect, analyze and predict visual objects.

Real-world Success Stories with Computer Vision

Now that we have a basic understanding of how computer vision works technically, let’s move on to some specific applications that showcase how it can improve our lives.

- Image and video analysis: Computer vision is used to analyze images and videos to extract useful information.

  - Zoom, one of the most popular video conferencing companies, leverages computer vision to identify and maximize the resolution of the most relevant sections of a video, usually human faces rather than objects in the background. These algorithms are used to improve video quality and enhance user engagement.

- Robotics: Computer vision is used in robotics to allow robots to perceive and understand their environment, which enables tasks such as navigation, grasping, and manipulation of objects.

  - In March 2021, Amazon Web Services, Inc. released “Amazon Lookout for Vision,” a computer vision service for monitoring products during manufacturing. Its goal is to simplify the process of designing, optimizing, and implementing computer vision models, making the technology accessible to a wider range of manufacturing companies. It also aims to help clients incorporate computer vision into their own facilities.

- Autonomous vehicles: Computer vision is used in autonomous vehicles to enable tasks such as object detection, lane keeping, and traffic sign recognition.

  - An article published by Aventior, Inc. in May 2021 emphasizes the key role that computer vision plays in making self-driving cars safer for passengers. The use of facial recognition software in combination with sensor technology allows for quick identification of cars, people, and other objects on the road. Furthermore, by creating a 3D map through real-time image capture and using algorithms to identify low-light conditions, computer vision improves the reliability of self-driving vehicles.

- Medical imaging: Computer vision is used in medical imaging to assist in tasks such as image-based diagnosis and treatment planning.

  - Zebra Medical Vision, an Israeli startup founded in 2014, uses AI and computer vision to analyze medical imaging in real time for early disease detection, assisting radiologists in making accurate diagnoses. The company has received FDA clearance and has partnered with several health systems, including Mayo Clinic and Mount Sinai Health System.

- Surveillance: Computer vision is used in surveillance systems to detect and track people or vehicles, and to recognize faces or license plates.

  - In July 2022, IDEMIA, a multinational technology company specializing in facial recognition and other computer vision software and products, announced a partnership with the U.S. Department of Homeland Security. By deploying facial recognition tools, this partnership is expected to support the department’s activities, such as identifying suspects during criminal investigations and travelers at airports, as well as streamlining security at checkpoints.

- Agriculture: Computer vision is used in precision agriculture to optimize irrigation and fertilization.

  - AgroScout uses drone cameras equipped with computer vision technology to recognize various agricultural conditions and information, such as crop health, aerial views of farmland, and soil conditions using geo-sensing capabilities. Techniques like semantic segmentation and image annotations are also used to detect and identify specific crops and pests.

- Retail: Computer vision is used in retail to track customers and products, and thus to improve the shopping experience.

  - Many fashion brands, such as Levi’s, Tommy Hilfiger, and Lululemon, offer virtual fitting room applications. With computer-vision-enabled technology, potential customers can try on garments, shoes, or accessories before they make a purchase. This feature is incredibly convenient, allowing for easy visualization of different colors and styles of items in real time.

- Gaming: Computer vision is used in gaming for tasks such as gesture recognition and facial expression recognition to improve interactivity.

  - The Microsoft HoloLens delivers a ‘Mixed Reality (MR)’ experience, allowing gamers to see holographic images that appear to interact with the real world. A popular MR game, Young Conker, employs object recognition to identify furniture within the room where it is being played. By recognizing the furniture, the game creates a unique playable area for each room, using the detected furniture as platforms for the character Conker to jump on.

Benefits of Computer Vision

As we can see, computer vision already has a wide range of uses across many sectors of the economy. Just as we use our eyes to see and understand the world, machines use computer vision. But they can process larger volumes of data at a much faster speed, and this is just one benefit that this technology brings. Here are more:

Automation that saves costs and improves efficiency and productivity

Computer vision allows for automation of tasks that would otherwise have to be performed manually, such as sorting and packaging in warehouses and distribution centers, allowing for faster processing times and reducing the need for human labor. This, in turn, can save time and increase work efficiency.

Increased accuracy and better decision-making

As we discussed throughout this article, computer vision can be used to extract and analyze valuable information from images and videos. In the medical field, for instance, data sources could be medical images such as X-rays, CT scans, and MRI images. By analyzing these images, computer vision helps medical professionals detect diseases, injuries, or other conditions early on and improve the accuracy of diagnosis and treatment decisions.

Improved safety

When used in tasks such as surveillance and self-driving cars, computer vision helps to improve safety. Surveillance powered by computer vision can automatically monitor public spaces for potential safety hazards. This could provide a faster response to emergency situations.

Better customer experience

Virtual try-on allows consumers to try on clothes virtually before making a purchase, self-checkout enables consumers to scan and pay for items without the need for a cashier, and image search allows consumers to search for products by uploading an image. All of these can make the shopping experience more enjoyable.

Concerns around Computer Vision and Best Practices

While we celebrate the tremendous benefits and efficiency that this technology brings, there are still some fundamental issues that we need to address. Let’s look at a few in the following table, along with some best practices associated with each.

| CONCERNS | BEST PRACTICES |
|---|---|
| Privacy concerns: Facial recognition technology in particular has been the subject of much debate due to concerns about its use by law enforcement and governments. | Consider ethical implications: It's important to consider these ethical implications when developing and deploying the technology. |
| Bias and discrimination: Computer vision systems can be trained on biased data, which can result in biased and discriminatory outcomes. | Use diverse and representative data: It's important to use diverse and representative data to ensure that the system can accurately identify and recognize different individuals, objects, and patterns. |
| Lack of transparency: Computer vision systems can be difficult to understand or interpret, and it can be hard to know how they make decisions. This lack of transparency can make it difficult to ensure that the systems are fair and unbiased. | Use appropriate evaluation metrics: It's important to use appropriate evaluation metrics to evaluate the performance of a computer vision system. These metrics should be chosen to enhance the explainability of the model, so that stakeholders can understand how it works and how decisions are made. |
| Data breaches and cyber security: Computer vision systems process a lot of personal data, which can be vulnerable to hacking or other cyber-attacks. If not properly secured, it can lead to data breaches and the leakage of sensitive information. | Secure your data: It’s important to ensure that the data is properly secured to prevent data breaches or leakage of sensitive information. Therefore, when designing and building a computer vision system, a data storage plan is warranted. |
| Inaccuracies: No algorithm is immune to inaccuracy. Computer vision systems will inevitably make mistakes or wrong decisions. This could potentially lead to extremely negative impacts on certain tasks. For example, false positive identifications by a surveillance system could cause severe trust issues regarding civil liberties. | Regularly update the algorithms: As new data and new techniques become available, your existing computer vision systems can become outdated. It’s important to continuously monitor and track the model to catch any issues that may arise, such as biases. Also, regularly retraining the model could ensure that it stays accurate and relevant. |
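
One way to act on the bias and inaccuracy rows is to break an error metric down by group instead of reporting a single overall number. The sketch below computes a false positive rate per group. It is only an illustration: the group names and evaluation records are made up, and which metric and grouping are appropriate depends on the application.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, predicted_match, actual_match) tuples.

    Returns {group: false positives / actual negatives} for each group
    that has at least one actual negative.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_positives[group] += 1
    return {g: false_positives[g] / negatives[g] for g in negatives}


# Made-up evaluation results, for illustration only.
records = [
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", True, False), ("group_a", True, True),
    ("group_b", True, False), ("group_b", True, False),
    ("group_b", False, False), ("group_b", True, True),
]

rates = false_positive_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: false positive rate {rate:.2f}")
```

A large gap between groups is a signal to revisit the training data or the model before deployment, and tracking the same breakdown over time is one concrete form of the continuous monitoring recommended in the table.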

A bonus best practice that I would like to point out: computer vision is an extremely complex field, and technology by itself won’t take us anywhere without the industry context. Therefore, it’s important to collaborate with domain experts in the relevant field to ensure that the model is designed, developed, and deployed properly. Only with these practices in mind can we use computer vision in a way that maximizes the benefits and minimizes the risks of this technology.

If you’d like to start solving your own computer vision use cases, you can get started with ClearML by using our free tier servers or by hosting your own. Read our documentation here. You’ll find more in-depth tutorials about ClearML on our YouTube channel, and we also have a very active Slack channel for anyone who needs help. If you need to scale your ML pipelines and data abstraction or need unmatched performance and control, please request a demo. To learn more about ClearML, please visit: https://clear.ml/.

Conclusion

Thanks to the development of deep learning algorithms and the availability of massive datasets, we are able to teach computers to “see and understand” objects. Computer vision is such a rapidly growing field that it is finding applications in various industries. If you are interested in learning more about Computer Vision, you can get a list of great resources in this GitHub repository. We hope this article has given you some inspiration and guided you in the quest of changing how computers “see” the world!